Implementation Details

Implemented in five steps:

- Find all streets with coordinates for a specific area

- Image generation with metadata of the location for each street (multiple images per street)

- Image processing & road sign detection

- Data evaluation & crunching

- Visualization of results

Step 1: Find all streets with coordinates for a specific area

Two different approaches could be used:

- One approach would be to start at a random street within the defined area and algorithmically walk into every reachable street, similar to a project (Maze Generation) I worked on. Here the Google Maps API would be used to get every possible direction and location to move to.

- Specify a bounding box with coordinates for the area and use the Overpass API to get all street information.

Approach 2 has been used because a solution for this already exists (the Overpass API), whereas the Google Maps API is not free, so approach 1 would get expensive for large areas.
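Below is a minimal sketch of the Overpass step. It assumes the public endpoint `https://overpass-api.de/api/interpreter` and treats a "street" as any OSM way with both a `highway` and a `name` tag. That filter is my assumption and could be narrower. The function names are hypothetical. `out geom;` makes Overpass return the coordinates of every way inline, and the bounding box is given in Overpass's (south, west, north, east) order.

```python
import json
import urllib.parse
import urllib.request

# Public Overpass instance (assumption); any other instance accepts the same query.
OVERPASS_URL = "https://overpass-api.de/api/interpreter"


def build_street_query(south: float, west: float, north: float, east: float) -> str:
    """Overpass QL query for all named highway ways inside a bounding box.

    Overpass expects the bbox as (south, west, north, east);
    `out geom;` attaches the node coordinates to each way.
    """
    return (
        "[out:json][timeout:60];"
        f'way["highway"]["name"]({south},{west},{north},{east});'
        "out geom;"
    )


def fetch_streets(query: str) -> dict:
    """POST the query to Overpass and return the decoded JSON (needs network)."""
    data = urllib.parse.urlencode({"data": query}).encode()
    with urllib.request.urlopen(OVERPASS_URL, data=data, timeout=90) as resp:
        return json.load(resp)


def streets_with_coordinates(response: dict) -> dict[str, list[tuple[float, float]]]:
    """Map each street name to the (lat, lon) points of all its way segments."""
    streets: dict[str, list[tuple[float, float]]] = {}
    for element in response.get("elements", []):
        if element.get("type") != "way":
            continue
        name = element.get("tags", {}).get("name")
        if name is None:
            continue
        points = [(p["lat"], p["lon"]) for p in element.get("geometry", [])]
        # A street is often split into several ways, so merge them by name.
        streets.setdefault(name, []).extend(points)
    return streets
```

Usage would be `streets_with_coordinates(fetch_streets(build_street_query(s, w, n, e)))`. This returns every street with its list of coordinates, which can then be used for the image requests in step 2.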

Step 2: Image generation with metadata

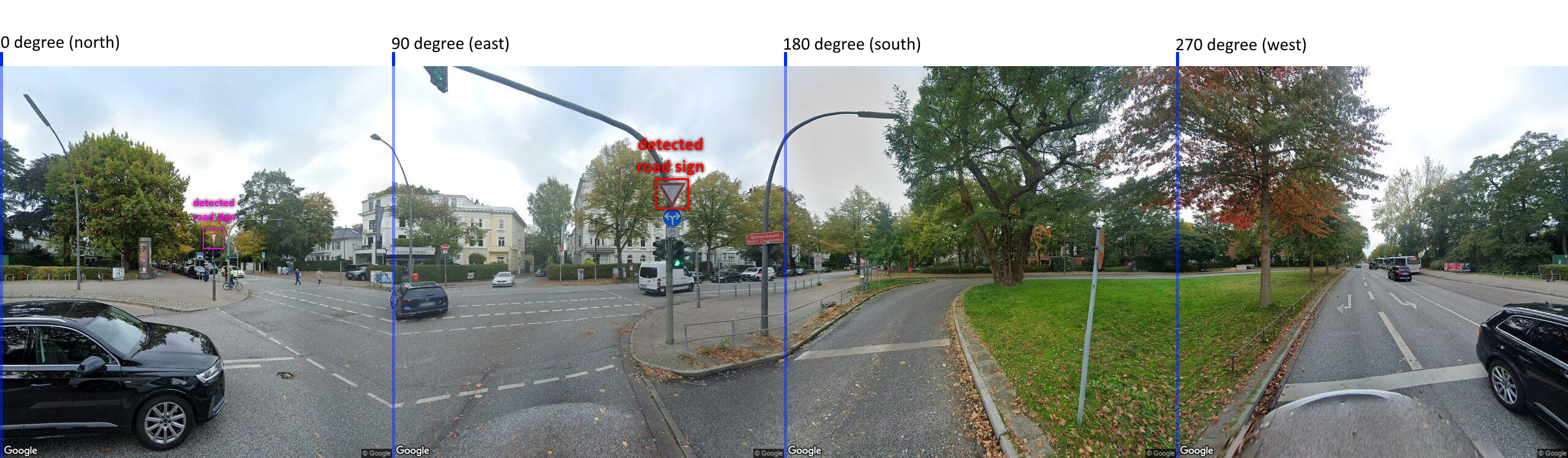

Use the Google Street View API to generate a 360-degree panorama picture with metadata for the street name, country, city, etc.

It is up for debate whether the street name, country, etc. are even necessary.
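The four image requests per location could be built with the Street View Static API. Using `heading` 0/90/180/270 with `fov=90` covers the full circle, starting with the north-facing view. The helper names and the 640x640 size are illustrative assumptions, and stitching the four images into one panorama is not shown. The metadata endpoint is a separate request that returns fields such as the panorama ID, capture date and panorama location.

```python
from urllib.parse import urlencode

STREETVIEW_URL = "https://maps.googleapis.com/maps/api/streetview"
METADATA_URL = "https://maps.googleapis.com/maps/api/streetview/metadata"


def streetview_image_urls(lat: float, lon: float, api_key: str,
                          size: str = "640x640") -> list[str]:
    """Build one Street View Static API request per cardinal direction.

    heading=0 faces north, so the first image is the north-facing view, and
    the four fov=90 images together cover the full 360 degrees.
    """
    urls = []
    for heading in (0, 90, 180, 270):
        params = {
            "size": size,
            "location": f"{lat},{lon}",
            "heading": heading,
            "fov": 90,
            "pitch": 0,
            "key": api_key,
        }
        urls.append(f"{STREETVIEW_URL}?{urlencode(params)}")
    return urls


def streetview_metadata_url(lat: float, lon: float, api_key: str) -> str:
    """Build the metadata request (panorama ID, capture date, location) for a point."""
    params = {"location": f"{lat},{lon}", "key": api_key}
    return f"{METADATA_URL}?{urlencode(params)}"
```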

Step 3: Image processing & road sign detection

Each image has to be processed and the following information has to be gathered:

- Classification of each road sign

- Bounding Boxes of each road sign

- (preferably) Height of the road sign from the ground

AI models to choose from:

- YOLO

- Detectron2
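Whichever model is chosen, each detection comes down to a class, a confidence and a bounding box. The sketch below shows a hypothetical record for that, plus a conversion from the YOLO label format to pixel coordinates. In that format the box centre, width and height are all normalised to the image size. The field and function names are my own, not part of the YOLO or Detectron2 APIs.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected road sign in one image (hypothetical record layout)."""
    label: str          # sign class, e.g. "stop"
    confidence: float
    x_min: float        # bounding box in pixel coordinates
    y_min: float
    x_max: float
    y_max: float

    @property
    def center_x(self) -> float:
        """Horizontal centre of the box; used later to derive the bearing."""
        return (self.x_min + self.x_max) / 2

    @property
    def area(self) -> float:
        """Box area in pixels; a larger box usually means a closer sign."""
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def from_yolo(label: str, confidence: float, xc: float, yc: float, w: float, h: float,
              img_w: int, img_h: int) -> Detection:
    """Convert a normalised YOLO box (centre x/y, width, height) to pixel corners."""
    return Detection(
        label=label,
        confidence=confidence,
        x_min=(xc - w / 2) * img_w,
        y_min=(yc - h / 2) * img_h,
        x_max=(xc + w / 2) * img_w,
        y_max=(yc + h / 2) * img_h,
    )
```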

Step 4: Data evaluation & crunching

With the images and their metadata there is a lot of data that could help identify each unique road sign and its (more or less) exact location. For this, I was considering the following approaches:

- Group by street name + street number and evaluate all images for the given street, keeping only the image where the road sign is closest to the camera (largest in the picture).

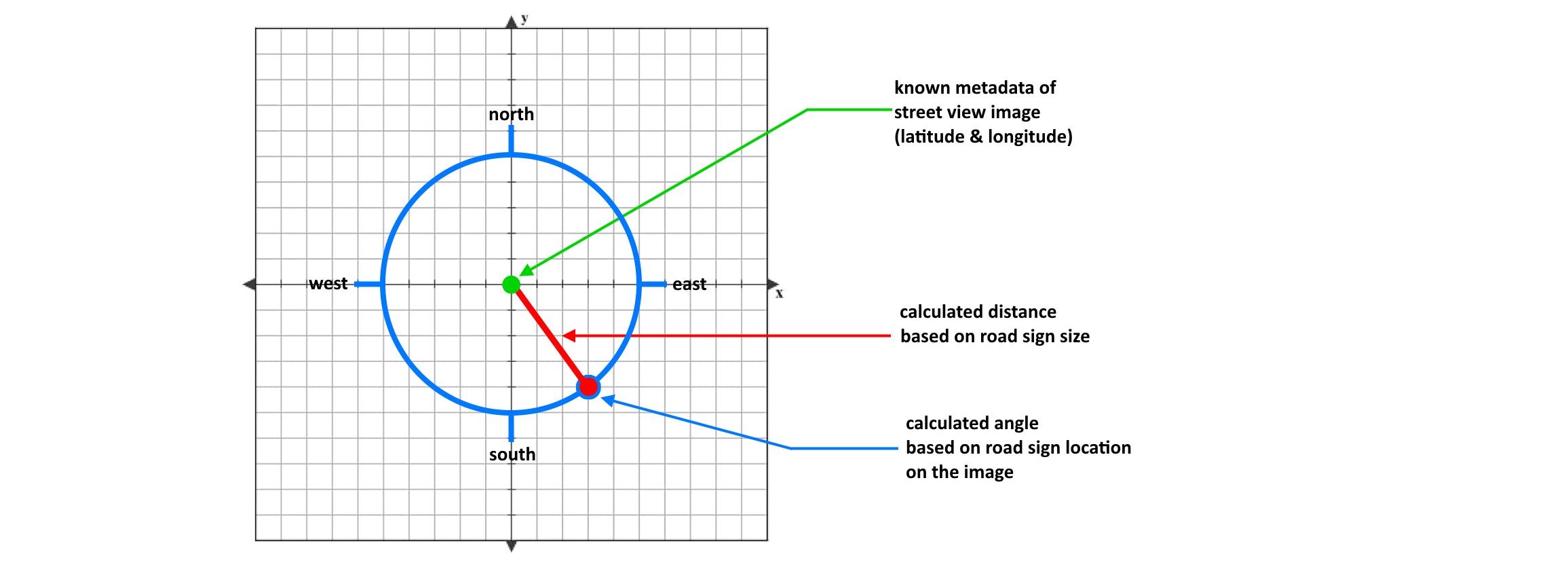

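- Calculate angle and distance based on the image. The pictures are 360-degree panoramas whose leftmost side always faces directly north, so the horizontal position of a sign in the picture gives its angle (bearing) from the camera. Together with the estimated distance, this gives the location of the sign.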

- Theoretically, images of the same sign could be grouped together and used to triangulate the road sign's location (requires at least 3 images per sign).

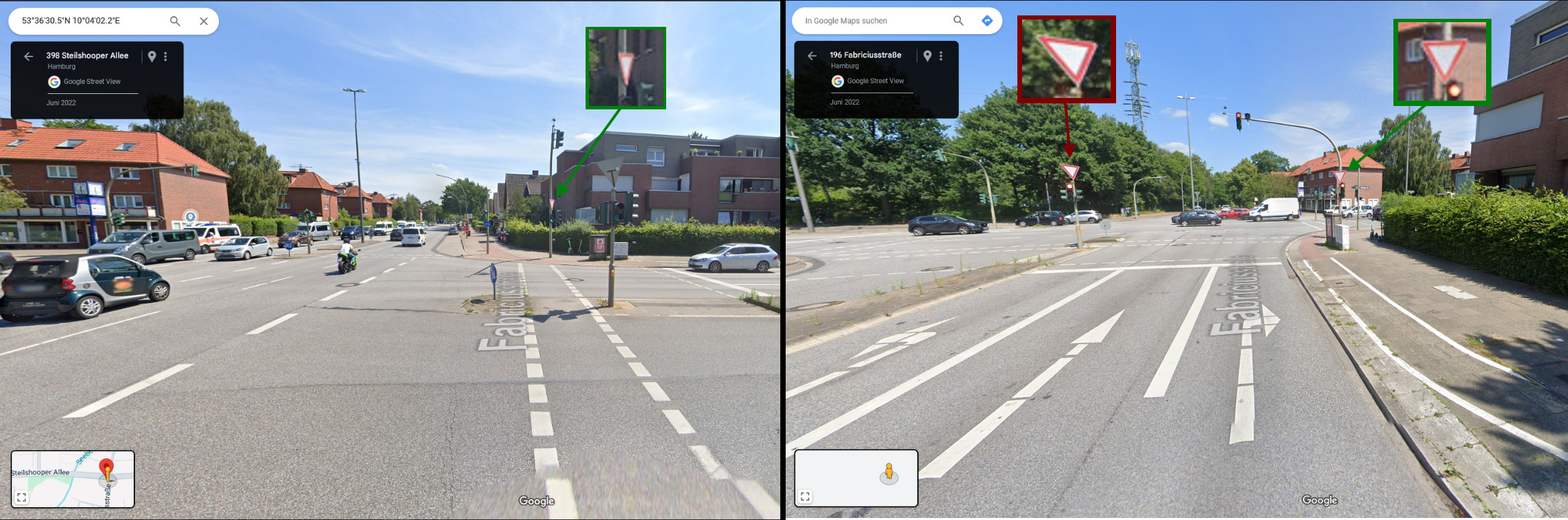

The first approach, however, would not work for two visually identical road signs next to each other, or for a road sign that is visible from two different streets, as in this example:

The green-outlined road sign is the same physical sign, but the two images were taken from different angles on completely different streets (street name and number in the top left of each picture). This makes the first approach infeasible.

Differentiating between the red-outlined and green-outlined road signs would also be difficult when processing the data as described above.

Using the second approach, we can calculate the location of each individual road sign in each picture, with fairly accurate results. Counting a single sign multiple times from different images is not a problem either. Road signs that lie very close to each other (within a small threshold, ~0.5 m for example) can be grouped, their latitudes and longitudes averaged, and the result counted as one unique road sign.
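The sketch below shows the projection part of approach 2 under two assumptions. First, the panorama is equirectangular with its left edge facing north, as stated above. Second, a distance estimate in metres is already available; how to estimate it is not shown. The sign's bearing is then linear in its horizontal pixel position. The standard spherical "destination point" formula turns camera position, bearing and distance into a latitude/longitude. All function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def bearing_from_pixel(x: float, image_width: int) -> float:
    """Compass bearing (degrees, 0 = north, clockwise) of a pixel column.

    Assumes a 360-degree equirectangular panorama whose left edge faces north.
    """
    return (x / image_width * 360.0) % 360.0


def destination_point(lat: float, lon: float, bearing_deg: float,
                      distance_m: float) -> tuple[float, float]:
    """Point reached from (lat, lon) after distance_m along bearing_deg."""
    phi1 = math.radians(lat)
    lam1 = math.radians(lon)
    theta = math.radians(bearing_deg)
    delta = distance_m / EARTH_RADIUS_M  # angular distance in radians
    phi2 = math.asin(math.sin(phi1) * math.cos(delta)
                     + math.cos(phi1) * math.sin(delta) * math.cos(theta))
    lam2 = lam1 + math.atan2(math.sin(theta) * math.sin(delta) * math.cos(phi1),
                             math.cos(delta) - math.sin(phi1) * math.sin(phi2))
    # Normalise longitude to [-180, 180).
    return math.degrees(phi2), (math.degrees(lam2) + 540.0) % 360.0 - 180.0


def locate_sign(cam_lat: float, cam_lon: float, sign_center_x: float,
                image_width: int, distance_m: float) -> tuple[float, float]:
    """Estimate a sign's lat/lon from camera position, box centre column and distance."""
    bearing = bearing_from_pixel(sign_center_x, image_width)
    return destination_point(cam_lat, cam_lon, bearing, distance_m)
```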

Approach 2 has been chosen for this project. Approach 3 could theoretically produce more precise data, but it would be much harder to implement and more computationally intensive for very little upside, since approach 2 comes close to triangulation by averaging the results of multiple images.
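The grouping step could be sketched as greedy clustering with the ~0.5 m threshold mentioned above. Each projected position joins the first cluster whose centroid is within the threshold (haversine distance); otherwise it starts a new cluster. Each cluster's averaged latitude/longitude is then reported as one unique sign. This simple, order-dependent clustering is my own choice for illustration, not a fixed part of the design, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def merge_detections(points: list[tuple[float, float]],
                     threshold_m: float = 0.5) -> list[tuple[float, float]]:
    """Greedily group points within threshold_m of a cluster centroid and
    return one averaged (lat, lon) per cluster, i.e. per unique sign."""
    clusters: list[list[tuple[float, float]]] = []
    centroids: list[tuple[float, float]] = []
    for lat, lon in points:
        for i, (c_lat, c_lon) in enumerate(centroids):
            if haversine_m(lat, lon, c_lat, c_lon) <= threshold_m:
                clusters[i].append((lat, lon))
                n = len(clusters[i])
                # Recompute the centroid as the mean of all members.
                centroids[i] = (sum(p[0] for p in clusters[i]) / n,
                                sum(p[1] for p in clusters[i]) / n)
                break
        else:
            # No nearby cluster: this detection starts a new unique sign.
            clusters.append([(lat, lon)])
            centroids.append((lat, lon))
    return centroids
```

The sketch ignores the sign class. Running it once per class would keep two different signs mounted on the same pole apart.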

Step 5: Data Visualization

The Python package folium can be used to generate an HTML map that visualizes all road signs from the result of the previous step.
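Here is a minimal folium sketch. It assumes each unique sign is a dict with hypothetical `lat`, `lon` and `label` keys. The code centres the map on the mean position, adds one marker per sign and writes a standalone HTML file; `road_signs.html` is a placeholder name. folium is imported inside the rendering function, so the small helper can be used without folium installed.

```python
def map_center(signs: list[dict]) -> tuple[float, float]:
    """Mean latitude/longitude of all signs, used as the initial map view."""
    lat = sum(s["lat"] for s in signs) / len(signs)
    lon = sum(s["lon"] for s in signs) / len(signs)
    return lat, lon


def render_sign_map(signs: list[dict], path: str = "road_signs.html") -> str:
    """Write an HTML map with one marker per unique road sign and return the path."""
    import folium  # imported here so map_center works without folium installed

    sign_map = folium.Map(location=list(map_center(signs)), zoom_start=17)
    for sign in signs:
        folium.Marker(
            location=[sign["lat"], sign["lon"]],
            popup=sign["label"],
            tooltip=sign["label"],
        ).add_to(sign_map)
    sign_map.save(path)
    return path
```

Calling `render_sign_map(unique_signs)` produces a file that can be opened directly in a browser.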

Sign Position Detection

Known Problems / Difficulties:

Image Processing

Google Maps provides two main image types for road sign detection: 360-degree panoramic views and standard 90-degree shots. Panoramic images are distorted due to spherical projection, while 90-degree images offer a more natural perspective.

Overall, panoramic images tend to produce more precise detections, likely due to their wider coverage. However, this is not always the case: the distortion can sometimes result in worse accuracy than with 90-degree images.
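One concrete consequence of the two projections is how a pixel column maps to a compass bearing. In an equirectangular panorama with north at the left edge, the mapping is linear. In a 90-degree perspective (pinhole) image it follows `atan(offset / focal_length)`, so columns near the edges cover less angle than those near the centre. The sketch below assumes the 90-degree image is an ideal pinhole projection with horizontal field of view `fov_deg` centred on `heading_deg`. The function names are hypothetical.

```python
import math


def bearing_rectilinear(x: float, image_width: int, heading_deg: float,
                        fov_deg: float = 90.0) -> float:
    """Bearing of pixel column x in an ideal perspective (pinhole) image."""
    # Focal length in pixels from the horizontal field of view.
    focal_px = (image_width / 2) / math.tan(math.radians(fov_deg) / 2)
    offset = x - image_width / 2  # signed distance from the image centre
    return (heading_deg + math.degrees(math.atan(offset / focal_px))) % 360.0


def bearing_equirectangular(x: float, image_width: int) -> float:
    """Bearing in a 360-degree panorama with north at the left edge (linear in x)."""
    return (x / image_width * 360.0) % 360.0
```

For example, a column three quarters of the way across a north-facing 90-degree image lies at about 26.6 degrees, not the 22.5 degrees a linear mapping would give.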

Google Maps pictures can be outdated

The resulting data may not include road signs that are on the streets today, or may show road signs that are no longer there.
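Outdated imagery can at least be flagged. The Street View metadata response includes a capture `date` (year and month, e.g. "2016-07"), so panoramas older than a chosen age could be skipped. The age cutoff and the function name are hypothetical. In this sketch, responses without an `OK` status or without a date are treated as outdated.

```python
from datetime import date


def is_outdated(metadata: dict, max_age_years: int, today: date) -> bool:
    """True if a Street View metadata response is older than max_age_years.

    Assumes the response has a "status" field and a "date" field formatted
    "YYYY-MM"; responses without a usable date are flagged as outdated too.
    """
    captured = metadata.get("date")
    if metadata.get("status") != "OK" or not captured:
        return True
    year, month = (int(part) for part in captured.split("-")[:2])
    age_months = (today.year - year) * 12 + (today.month - month)
    return age_months > max_age_years * 12
```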

AI could falsely detect or classify road signs, or not detect them at all

Test results of the AI will be given once a model has been implemented and trained.

Very expensive for large areas due to Google API costs

- 4 calls per panorama (one image per direction)

- 1 call per latitude/longitude pair from the Overpass API to snap it to a road in Google Maps

- 1 additional call per panorama for location metadata (street name, city, etc.)
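The call counts above can be turned into a rough cost estimate. This sketch assumes every latitude/longitude pair from Overpass becomes exactly one panorama location. Prices are deliberately left as a parameter because they depend on Google's current pricing, so the actual per-1000-request prices have to be filled in. The function name and dictionary keys are hypothetical.

```python
def estimate_api_cost(num_points: int, price_per_1000: dict[str, float],
                      images_per_point: int = 4) -> tuple[dict[str, int], float]:
    """Count Google API calls for num_points locations and estimate their cost.

    Follows the list above: one image call per direction, one snap-to-road
    call per point and one metadata call per panorama. Call types missing
    from price_per_1000 are counted at zero cost.
    """
    calls = {
        "street_view_image": images_per_point * num_points,
        "snap_to_road": num_points,
        "location_metadata": num_points,
    }
    cost = sum(count / 1000 * price_per_1000.get(kind, 0.0)
               for kind, count in calls.items())
    return calls, cost
```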

Written: 2024-10-05